
JAMA provides an expert example. They nearly got away with it. But then we read it.

By James Lyons-Weiler, PhD

Let me walk you through a masterclass in how to bias a study so thoroughly that even a real risk can disappear. Not through fraud or data tampering, but through good old-fashioned design flaws, all pointing in one direction. (Whether that is by accident, I will leave to the reader.) We’re going to talk about a study recently published in JAMA Network Open by Bernard et al. (2025), which claims that receiving an mRNA COVID-19 vaccine during the first trimester of pregnancy isn’t associated with birth defects.

You might have heard this result and thought, “Well, that’s reassuring.”

Let me show you why it’s not.

The Setup: A Live-Birth-Only Study

The researchers looked at over 527,000 live-born infants in France, comparing those whose mothers got an mRNA COVID-19 vaccine in the first trimester with those who didn’t. They then looked at the rate of major congenital malformations (MCMs) and said: “No difference.”

Their conclusion? The vaccine isn’t teratogenic. It doesn’t cause birth defects.

Here’s the problem: They only looked at live births. That means any fetus with a severe enough defect to lead to termination or fetal death is simply not counted. And guess what? The most severe defects—the ones most likely to show up if a vaccine did have a harmful effect—are the ones most likely to lead to termination or stillbirth.

Let me repeat that: the design excluded the most relevant cases before the analysis even started.

The Scale of the Omission

We don’t need to guess how big this omission is. France participates in EUROCAT, a European congenital anomaly surveillance system. From their own data:

- About 8.35 per 1,000 pregnancies end in termination for fetal anomaly (TOPFA).

- Another 1.11 per 1,000 end in perinatal death with a malformation.

That’s 9.46 anomaly-affected pregnancies per 1,000 removed by design.

As a share of all anomaly-affected pregnancies in the same EUROCAT data, that’s about 32%.

So when Bernard et al. say they found no increased risk in their cohort, they’re only looking at the surviving 68% of anomaly cases. That’s like judging a ship’s safety by counting only the passengers who reached the lifeboats.

The Bias Multiplier: A Lesson in Selection Bias

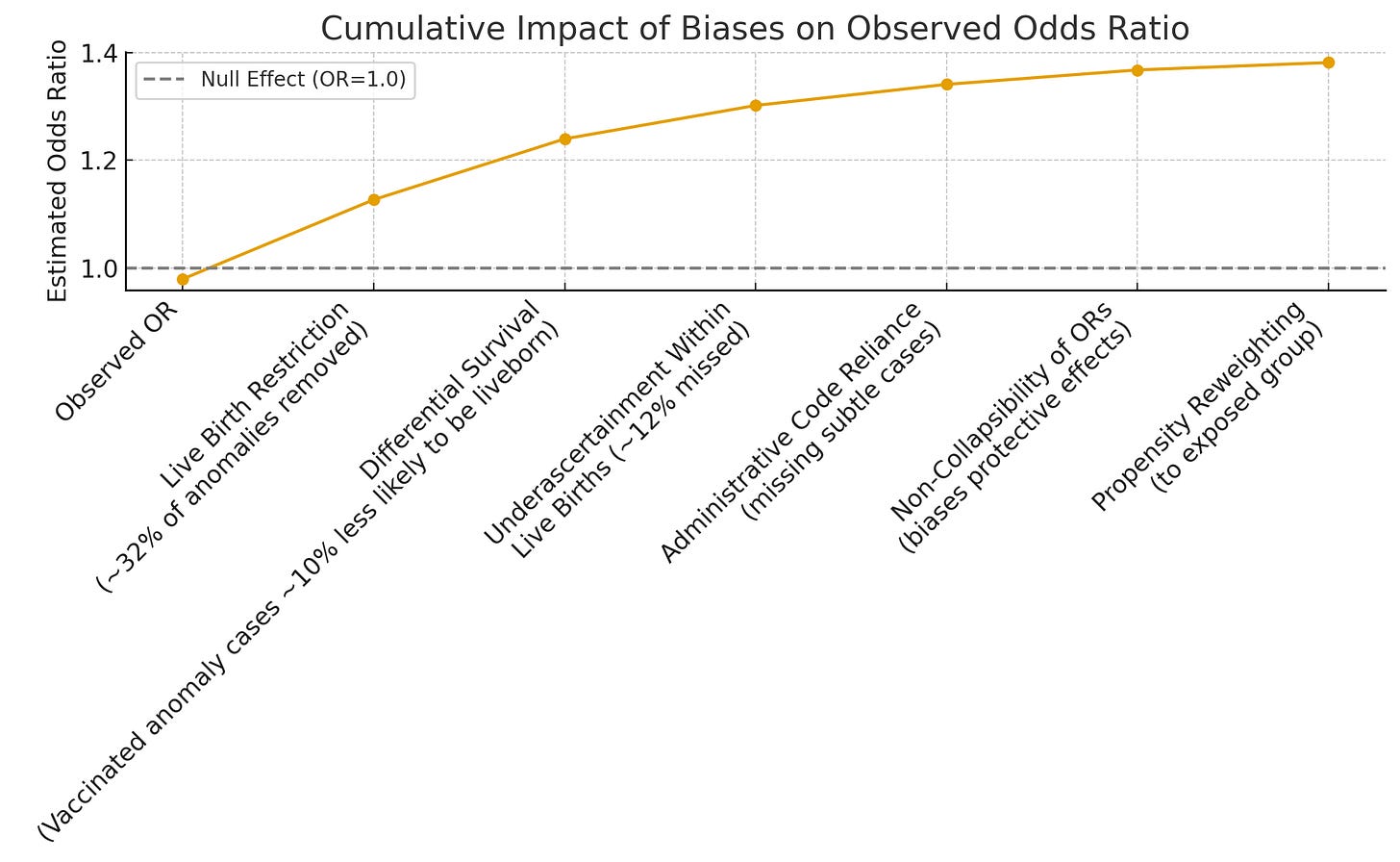

Here’s how this works, mathematically. In a live-birth-only study, the observed odds ratio (OR_obs) equals the true odds ratio (OR_true) multiplied by a bias factor. If anomaly-affected pregnancies in the vaccinated group are even 10% less likely to end in a live birth than anomaly-affected pregnancies in the unvaccinated group (because of more terminations or stillbirths), then, all else being equal, the bias factor is 0.9 and the observed odds ratio comes out 10% lower than the true one.
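The bias factor can be written out explicitly. This is a standard selection-bias decomposition, sketched under stated assumptions; the symbols are introduced here for illustration and do not come from Bernard et al. Let $S_{ed}$ be the probability that a pregnancy with exposure $e$ (1 = vaccinated in the first trimester, 0 = not) and anomaly status $d$ (1 = anomaly, 0 = none) ends in a live birth and therefore enters the cohort:

$$\mathrm{OR}_{\mathrm{obs}} = \mathrm{OR}_{\mathrm{true}} \times B, \qquad B = \frac{S_{11}\,S_{00}}{S_{10}\,S_{01}}$$

Assuming unaffected pregnancies reach live birth equally often in both groups ($S_{10} = S_{00}$) and affected vaccinated pregnancies do so a fraction $\delta$ less often than affected unvaccinated ones ($S_{11} = (1-\delta)\,S_{01}$), the factor reduces to $B = 1 - \delta$, so

$$\mathrm{OR}_{\mathrm{true}} = \frac{\mathrm{OR}_{\mathrm{obs}}}{1-\delta}, \qquad \frac{0.98}{0.90} \approx 1.09, \qquad \frac{0.98}{0.80} \approx 1.23$$

Here $\delta$ is an assumed input, not something the study measured.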

Quick math:

- OR_obs = 0.98 (what they reported).

- 10% lower survival of affected pregnancies in the vaccinated group → OR_true ~1.09.

- 20% lower → OR_true ~1.23.

That’s all it takes to turn a real signal into “nothing to see here.”

And remember, France’s surveillance data already tell us that a third of anomaly-affected pregnancies never show up as live births. Whether vaccine-exposed fetuses with defects were disproportionately among them is exactly the question a live-birth-only design cannot answer; that blind spot is built into the analysis, as we have seen many times before.

Historical precedent shows that live-birth-only studies can substantially underestimate teratogenic risk. For drugs such as valproate and isotretinoin, early analyses limited to surviving infants understated the harm; only when pregnancy registries began systematically including terminations for fetal anomaly (TOPFA) and stillbirths did the full scope of risk become apparent. In the case of isotretinoin, for example, prospective Motherisk and EUROCAT registry data revealed a striking prevalence of central nervous system and craniofacial defects, patterns that were underrepresented or entirely missed in live-birth analyses. Similarly, valproate’s association with neural tube defects was underestimated until registry-based studies incorporated non-live outcomes and stratified by timing of exposure.

This history isn’t anecdotal; it’s methodological canon. The WHO and EMA both emphasize the need to include prenatal losses in teratogenicity surveillance for precisely this reason. By analyzing only live births and excluding every pregnancy that ended in fetal death or termination, the Bernard et al. study repeats the early-stage design error that delayed recognition of harm in past pharmacovigilance failures. Its finding of “no increased risk” should therefore be read not as evidence of absence, but as a predictable artifact of an exclusionary analytic frame, one that in prior contexts has allowed dangerous exposures to persist unchallenged for years.

Other Ways They Softened the Signal

Several other design decisions also tilted the study away from detecting a risk:

- Administrative Codes: They used billing codes to detect malformations. These miss subtle or late-diagnosed anomalies. That means more false negatives.

- First-Year Detection Window: Anomalies were mostly ascertained only within the first year of life, and some are not diagnosed that early.

- Odds Ratios: For rare outcomes such as major malformations, the odds ratio closely tracks the risk ratio; where the two differ, the odds ratio sits further from 1 in both directions, so this choice matters less than the others.

- Propensity Weighting: They reweighted the comparison group to look like the vaccinated group (older, less deprived, more prenatal care). But more screening means more diagnosis—and they still found slightly lower defect rates in the vaccinated group. That tells you the bias is stronger than the screening.
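How far the odds ratio departs from the risk ratio can be checked numerically. A minimal sketch using invented cohort counts (not data from Bernard et al.): it compares the two measures for a rare and a common outcome, in both the elevated and the protective direction. The helper names are hypothetical.

```python
def risk_ratio(a: int, n1: int, c: int, n0: int) -> float:
    """Risk ratio: (a / n1) / (c / n0), with a cases among n1 exposed, c among n0 unexposed."""
    return (a / n1) / (c / n0)


def odds_ratio(a: int, n1: int, c: int, n0: int) -> float:
    """Odds ratio: (a / (n1 - a)) / (c / (n0 - c))."""
    return (a / (n1 - a)) / (c / (n0 - c))


# Hypothetical cohorts of 10,000 per group; counts are invented for illustration only.
scenarios = {
    "rare, elevated (3.0% vs 2.5%)": (300, 10_000, 250, 10_000),
    "rare, protective (2.0% vs 2.5%)": (200, 10_000, 250, 10_000),
    "common, elevated (30% vs 25%)": (3_000, 10_000, 2_500, 10_000),
    "common, protective (20% vs 25%)": (2_000, 10_000, 2_500, 10_000),
}

for label, counts in scenarios.items():
    rr = risk_ratio(*counts)
    or_ = odds_ratio(*counts)
    print(f"{label}: RR = {rr:.3f}, OR = {or_:.3f}")
```

Run as-is, the odds ratio lands slightly further from 1 than the risk ratio in every scenario, and the gap is small when the outcome is rare.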

When every source of bias points in the same direction—toward the null or even “protective”—you don’t have evidence of no risk. You have evidence of bias overpowering signal.

What Happens When You Correct for This?

We ran the numbers. If you apply just a 10% selection correction to their published values, here’s what you get:

- Cardiac anomalies: OR 1.01 → 1.12

- Limb anomalies: OR 1.09 → 1.21

- Hip dislocation: OR 1.23 → 1.37

All three point estimates now sit clearly above 1, and that’s with only 10% differential selection. A 20% difference pushes them higher still.
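The corrected values above are simple division by an assumed bias factor. A minimal Python sketch, assuming the single-parameter model in which unaffected pregnancies are selected equally in both groups and affected vaccinated pregnancies are a fraction `delta` less likely to end in a live birth; `corrected_or` is a hypothetical helper, and `delta` is an assumption, not an estimate from the study.

```python
def corrected_or(or_obs: float, delta: float) -> float:
    """Divide an observed OR by an assumed selection bias factor B = 1 - delta.

    delta is the assumed fractional shortfall in live births among
    anomaly-affected vaccinated pregnancies relative to anomaly-affected
    unvaccinated ones. It is a hypothetical input, not a measured quantity.
    """
    if not 0 <= delta < 1:
        raise ValueError("delta must be in [0, 1)")
    return or_obs / (1 - delta)


# Observed odds ratios as quoted in the text.
reported = {
    "overall (reported)": 0.98,
    "cardiac": 1.01,
    "limb": 1.09,
    "hip dislocation": 1.23,
}

for delta in (0.10, 0.20):
    for outcome, or_obs in reported.items():
        print(f"delta={delta:.0%} {outcome}: {or_obs:.2f} -> {corrected_or(or_obs, delta):.2f}")
```

With `delta = 0.10`, the printed values for the three organ systems match the corrected figures listed above. The sketch only moves point estimates; it says nothing about how large `delta` actually is.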

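If you’re wondering whether anomaly-affected pregnancies in the vaccinated group could really be 10% less likely to end in a live birth, the answer is plausibly yes. France’s EUROCAT data show that about 32% of anomaly-affected pregnancies already do not end in a live birth, so even a modest between-group difference in screening or termination decisions could produce a gap of that size.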

So we’re not imagining the bias. We’re quantifying it.

Multiple Hypothesis Testing Can Mask a Pattern

The authors conduct a massive battery of statistical tests: 75 individual major congenital malformation (MCM) comparisons, 13 organ system comparisons, and 78 stratified subgroup comparisons. Yet they report only six statistically significant results, all in the direction of reduced risk, and dismiss these as likely type I errors arising from multiple comparisons. The omission here is twofold. First, they apply no formal correction for multiple testing (such as Bonferroni or Holm), despite the sheer volume of comparisons. Second, they do not explore what the expected distribution of false positives would look like under a truly null association. With 166 comparisons, the conventional α = 0.05 threshold implies about 8 significant results (166 × 0.05 ≈ 8.3) by chance alone if the tests were independent, and under a true null those chance results should fall roughly evenly on the side of harm and the side of protection. That none of the significant results points toward elevated risk is statistically improbable and warrants scrutiny.

This absence of significant findings in the direction of harm is, paradoxically, a signal in itself. If noise alone were at play, we would expect fluctuations in both directions: some false harms, some false protections. The asymmetric outcome (only protective results reach nominal significance) suggests not random error, but systematic bias in effect estimation, reporting, or both. It points either to suppression of true positive associations or to design artifacts (like live-birth conditioning or exposure misclassification) strong enough to erase even the chance appearance of harm. In this light, the authors’ insistence that their null results are “reassuring” fails not only in design logic, but also against basic statistical expectations.
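The chance-level arithmetic behind this argument can be sketched in a few lines of Python. This is an illustration under a strong simplifying assumption, not a reanalysis: it treats the 166 comparisons as independent two-sided tests, whereas in the study the organ-system comparisons contain the individual MCMs and the subgroups overlap, so the real tests are correlated and the true probabilities will differ.

```python
# Counts of comparisons as described in the text: 75 MCMs, 13 organ systems, 78 subgroups.
n_tests = 75 + 13 + 78
alpha = 0.05

# Expected number of nominally significant results if every null were true
# and (a strong assumption) the tests were independent.
expected_false_positives = n_tests * alpha

# Bonferroni: each test must clear alpha / n_tests to hold the family-wise error rate at alpha.
bonferroni_threshold = alpha / n_tests

# Under a true null with two-sided tests, a chance "hit" is equally likely to fall
# on either side of OR = 1. Probability that all k hits land on the protective side:
k_significant = 6
p_all_protective = 0.5 ** k_significant

print(f"comparisons: {n_tests}")
print(f"expected chance hits at alpha={alpha}: {expected_false_positives:.1f}")
print(f"Bonferroni per-test threshold: {bonferroni_threshold:.5f}")
print(f"P(all {k_significant} hits protective | null, independence): {p_all_protective:.4f}")
```

Under these assumptions, about 8 chance hits are expected, the Bonferroni per-test threshold is roughly 0.0003, and the probability that all six significant results land on the protective side is (1/2)^6 = 1/64, about 1.6%.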

The Bottom Line

This isn’t a smoking gun. It’s a missing body count. When you build a study that excludes the most severe cases, undercounts the ones that remain, and smooths the rest with statistical weighting, you don’t get a “safety study.” You get a safe-looking study.

Methodological bias in a study can be due either to incompetence or to fraud. When all of the biases point in the same direction, well, I’ll leave it to the reader to decide. Either way, the study is biased, and every one of those biases helps a null result come out looking “reassuring.”

If you’re a policymaker, a journalist, or a pregnant woman trying to make informed choices, understand this: A study that doesn’t count all the outcomes can’t make all the claims.

Especially not about safety.

Citations and data sources:

- Bernard et al., JAMA Netw Open. 2025;8(10):e2538039.

- EUROCAT Key Indicators: France, 2018–2022.

- Heinke et al., Paediatr Perinat Epidemiol. 2020.

- Velie & Shaw, Am J Epidemiol. 1996.