A history of business and server computing, and of the trend among the large cloud providers away from X86 processors toward custom Arm designs to lower costs. The new open source RISC-V instruction set may be positioned as the next step to take over.

If Arm’s licensable technology is a parallel to the rise of RISC/Unix, then perhaps RISC-V, which has an open source instruction set and logic blocks, is analogous to Linux. Arm may be set up for a good decade long run in the datacenter, at the edge, and in our client devices, but watch out for RISC-V. Ten years from now, we might be writing the same story all over again, with one more historical ring wave added. In fact, it is hard to imagine any other alternative on the horizon.

https://www.nextplatform.com/2022/09/22/arm-is-the-new-risc-unix-risc-v-is-the-new-arm/amp/

Timothy Prickett Morgan

When computer architectures change in the datacenter, the attack always comes from the bottom. And after more than a decade of sustained struggle, Arm Ltd and its platoons of licensees have finally stormed the glass house – well, more of a data warehouse (literally) than a cathedral with windows to show off technological prowess as early mainframe datacenters were – and are firmly encamped on the no longer tiled, but concrete, floors.

For modern corporate computing, Day One of the Big Data Bang comes in April 1964 with the launch of the System/360 mainframe. Yes, people were farting around with punch cards and tabulating machines for 75 years and had electro-mechanical computation, and even true electronic computation, before then. But the System/360 showed us all what a computer architecture with hardware and software co-design, with breadth and depth and binary compatibility across a wide range of distinct processors, really looks like. And by and large, excepting a change in character formatting from EBCDIC to ASCII, a modern computer (including the smartphone in your hand) conceptually looks like a System/360 designed by Gene Amdahl that had a love child with a Cray-1 designed by Seymour Cray.

But that is not the point.

The point is that the richest companies in the world adopted the System/360 to create back office systems, getting rid of a lot of the manual processes that come with running a Fortune 500 or Global 2000 enterprise (thus eliminating a huge amount of human costs) while also allowing data to be stored and used on a scale never seen before to actually drive the business instead of just watching it.

Waves Upon Waves

And this first wave of true corporate computing made IBM fabulously rich and the bluest of the blue chip stocks that the world has ever seen. And will ever see. And hence there were so many clones of the System/360 architecture.

Digital Equipment, an upstart maker of proprietary – and interactive – minicomputers established in 1957, before the mainframe era started and aiming its wares at the technical market, was having none of that. With the launch of the PDP-11 in 1970 and the VAX in 1977, high performance programming became affordable for the masses, and a wave of proprietary minicomputers came out of everywhere, swamping the mainframe on all sides and doing new kinds of work. (Cheaper computing always drives new workloads. AI is but the latest example.) In response, IBM had three different minicomputer lines itself, and this wave democratized corporate computing across small and medium businesses in a cost-effective way that a mainframe never could.

The success of the proprietary minicomputers spawned a new breed of server makers, using computationally efficient RISC processors and operating systems that hewed to the Unix standard to various degrees that started off in scientific workstations in the mid-1980s.

Sun Microsystems, Hewlett Packard, and a small British company called Acorn RISC Machines (“from little acorns big oak trees grow”) led the RISC/Unix charge, and now the mainframes and minicomputers were under attack again. The attack was so successful that eventually IBM, DEC, Fujitsu, NEC, Bull, Siemens, and everyone who was selling systems had a RISC/Unix line. RISC/Unix peaked at 45 percent of the revenue of the worldwide server market in the early dot-com years – every company had a Unix backend with an Oracle relational database driving their Web sites.

But several years before that moment, the Intel X86 PC on our desktops got tipped on its side and jumped into the datacenter. Intel expanded and hardened the X86 architecture, which was not initially as capacious or reliable as the RISC chips made by Sun, HP, Data General, ARM, and others. It didn’t need to be, as computing on the Web was more distributed. What the Web-scale world needed was for datacenter compute to be cheaper, and the X86 architecture was certainly cheaper than the RISC/Unix machines of the time.

The relative affordability and absolute compatibility of the X86 architecture literally transformed the world. By the time the Great Recession was roaring in early 2009, X86 servers accounted for about half of the $10 billion in quarterly sales of systems worldwide. And then the next wave of Internet innovation hit, and these non-X86 architectures dwindled ever so slowly – still including pretty big IBM mainframe and minicomputer businesses, oddly enough – to about $2.5 billion per quarter, but the X86 market rose exponentially to be between $20 billion and $25 billion a quarter these days. And that is why the X86 architecture accounted for 99 percent of server shipments and 93 percent of revenues at its peak in 2019, according to data from IDC.

Yup, peak X86 was three years ago. And now the Arm CPU architecture that is in our tablets and smartphones and that took over as the embedded controller of choice from PowerPC, which displaced the Motorola 68K – and all four CPU architectures famously used in Apple machines – is pulling the X86 off that peak even as server volumes and revenues keep growing.

If you ever wonder why Intel chief executive officer Pat Gelsinger is so hot to trot with opening up the Intel foundry business, this is why. Because, as we have been saying for more than a decade now, when Arm comes into the datacenter, another profit pool is going to be eliminated and the money is just going to end up in someone else’s pocket. It happened to IBM, DEC, Sun, IBM again, and now it will happen to Intel and AMD, too. If server CPUs can’t command the kinds of margins they have for the past decade and a half for Intel, then manufacturing server CPUs for those rich enough to design their own – and who won’t be buying Intel Xeons except for massive legacy Windows Server and Linux workloads that won’t move to the Arm architecture ever – might.

The good news for Intel is that X86 infrastructure will always command a premium over Arm infrastructure because of that legacy code, mostly running on Windows Server, that neither Microsoft nor its customers want to move to the Arm platform. They all lived through trying to port to Itanium and they are not going down that rathole again, no matter how good the PR story is.

But for new workloads, forget it. Arm will very quickly become the CPU of choice on the clouds and among the hyperscalers unless Intel radically cuts prices on Xeon SP CPUs. With a 30 percent to 40 percent price/performance advantage for equivalent performance for a cloud instance, why would you deploy on an X86 instance instead of an Arm instance? Perhaps you buy a Xeon SP – and a back generation one at that – because AMD is sold out of Epyc CPUs and that is all you can get?

The Third Wave Of Arm Server Processors

This is the world we live in as the third wave of Arm servers is actually crashing through the datacenter doors, as we talked about last week when Arm Ltd, the company that licenses the Arm instruction set and Neoverse core, mesh interconnect, and other server CPU design blocks, unveiled some of the specs on its future designs, including the N2, V2, and E2 cores and their follow-ons.

In this follow-on story to the Neoverse roadmap update, we are concerned with the competitive environment and how Arm is getting more traction now, and why.

Chris Bergey, general manager of infrastructure at Arm Ltd, set the competitive environment thus:

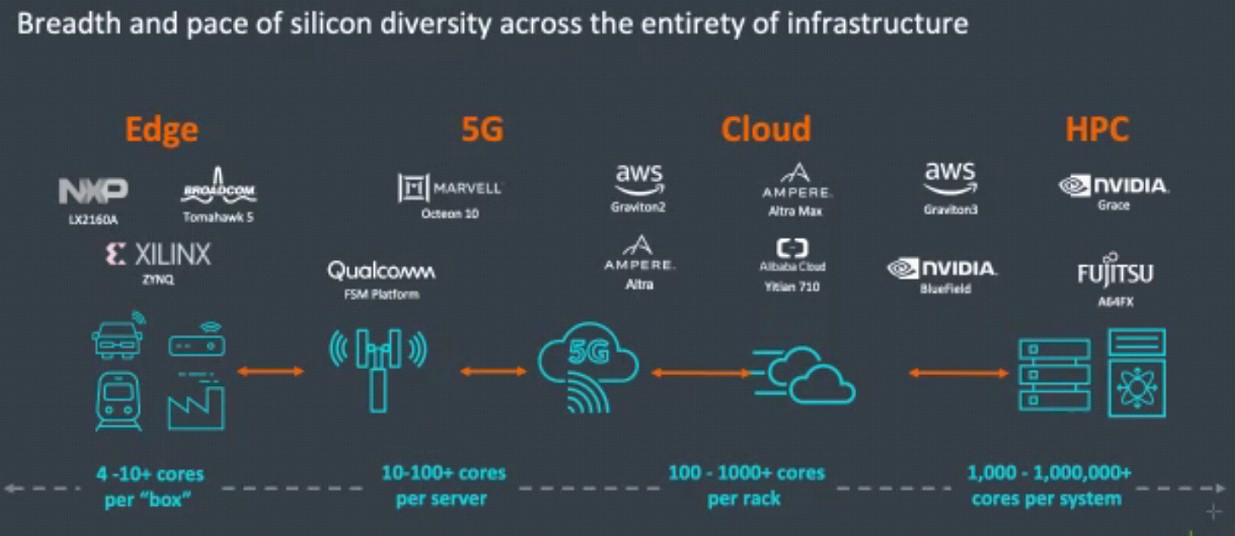

“Today’s infrastructure is custom built, from SSDs to HDDs to DPUs to video accelerators. The last standard product, the server CPU, will not cut it as a general purpose product moving forward. Power consumption is already too big of an issue. Hyperscalers spend 30 percent to 40 percent of their entire TCO on power, and it’s only slightly better for telco network operators. Data rates are advancing too fast. Compute workloads are on a relentless march higher, and they’re becoming much more complex. Machine learning and AI are taking over. The future of infrastructure must look nothing like the past. It needs a redefinition. Infrastructure will need to be ubiquitous. The cloud will continue to exist in mega datacenters. But our entertainment experiences, transportation, and the way we communicate will be transformed by the build out of the edge. It will be accelerated to support the immersive, visual, tactile, real-time experiences being dreamed up by AR and VR creators. It will be power efficient, and DPUs show us how to get there.”

James Hamilton, distinguished engineer at Amazon Web Services, explained at a recent semiconductor conference why the cloud giant decided to get into CPU design itself, and it explains why others have followed – Alibaba comes to mind with its Yitian homegrown Arm server chip – or have hitched their wagons to other Arm chip designers such as Ampere Computing or HiSilicon. Here is what Hamilton said, and it is the most important thing AWS has said in years, so we are quoting him at length:

“This industry is all about volume. Any time you can do anything at scale, it opens up more R&D investment. You can do more for customers. And really, the reason why I am still at AWS more than 13 years later is because every time we grow, it makes more things possible. We could have never done semiconductor work if our customers didn’t trust us at extreme scale to run their workloads. And because of that, we can invest more. And so really, the same thing is going on at AWS that is going on at Arm. Great work brings lots of customers, allows more innovation and more great work follows from it. The conclusion is preordained: Arm’s mobile volumes are going to feed the R&D investment necessary to produce great server processors. So that is the first thing.”

“The second context point is different, and it is just an observation. It doesn’t sound scary, but for me it is. And that is this: Servers are big, complicated devices that fit on a board. They used to be big, complicated devices that used to be the size of a refrigerator. They’re getting smaller and in fact what is happening is that the server on a board is more and more being SoC’d up onto the package. Eventually – this will take time – but eventually, in my opinion, a server is going to be a system on a chip. It will all come up off the board and land on a chip. Servers today are quite a long way from that – they are. But I have observed over the years that what happens in mobile ends up happening in servers, it just takes five to ten years. Many of us are convinced this is definitely going on. Think about it: Why is this important to us? We have been doing custom servers for a long, long time. We deliver more value to customers because we do custom servers. If all of the innovation in the server is being pulled up on chip, and we don’t build chips, we don’t innovate.”

And with that argument, back in 2013, AWS started going down the road to custom hardware that led to the company acquiring Annapurna Labs and designing its own compute, networking, and storage engines. (And we think possibly its own switch ASICs.)

Not to pick a historical bone with Hamilton, who probably knows more about hardware and software infrastructure than anyone else on Earth, but maybe it is more accurate to say that the attack on the datacenter always comes from the outside in, and that any technology innovation done in one ring moves in successively until it transforms the datacenter. Similarly, some datacenter technologies move from the inside out – like the basic computer architecture – and then a wave hits a hard problem out there and comes back with a slightly different wave that is absorbed in the other tiers as an idea proves successful.

Our point is that this is not just about mobile affecting servers. John Cocke created a Reduced Instruction Set Computing architecture to try to make CPUs more efficient in the experimental 801 system in the 1970s, which ended up being used in IBM mainframe controllers and not much else. And along with the help of luminaries like David Patterson, Complex Instruction Set Computing (it wasn’t called that until RISC made a distinction necessary) that prevailed in all CPU designs was replaced by the RISC method. Even the venerable X86 is a RISC computer at heart that is pretending to be a CISC machine, and has been for decades. We would not be surprised if IBM is, under the covers, doing the same thing with its System z mainframe motors.

Waves flow back and forth between the computing tiers as new technologies are dropped into the IT ocean. Proprietary minicomputers brought cheaper CPUs and interactive programming to new workloads, and these ideas were absorbed by the datacenter. RISC/Unix machines were designed as technical workstations, but were beefed up into servers to bring more power efficient computation and unified operating system APIs to the datacenter. The PC microprocessor got bigger, faster, and more reliable and it brought a universal substrate from the desktop and laptop to the datacenter at exactly the time we needed 10X, and then another 10X, more performance to power Web 2.0. And with the third generation of the Web, which many are starting to call the metaverse, with its integrated AI and HPC and immersive experiences, innovation is happening all at once in the datacenter, at the edge, and on the clients. The metaverse will need mass customization and hyperdistribution of highly tuned compute, network, and storage to function, and it will only be affordable in this fashion.

It Is Arm’s Turn Now

Clearly, the rise of the Arm-based smartphone and the ubiquity of the Arm SoC has changed all levels of infrastructure, as Hamilton correctly pointed out.

Given all of this, it is no surprise to us that companies building the massive datacenters, including AWS and Alibaba directly and others indirectly through partnerships, have been innovating at the high end alongside Arm. Bergey called out four examples of pushing performance on different vectors by Arm chip designers:

The scale of Arm server deployments is on the order of hundreds of thousands of units against a few million units per quarter, but the Arm slice is growing fast. And the success of Arm is not being driven by performance per watt in the strictest sense, as was the drive early on with Arm server designs from a decade ago. This time, it is about an Arm CPU having equivalent or better performance than an X86 CPU, and much lower cost – and about the hyperscalers, cloud builders, and telcos having the option of customizing an Arm CPU design and having their own relationships with the few remaining advanced process chip foundries. This is all about IT organizations controlling their own fate.

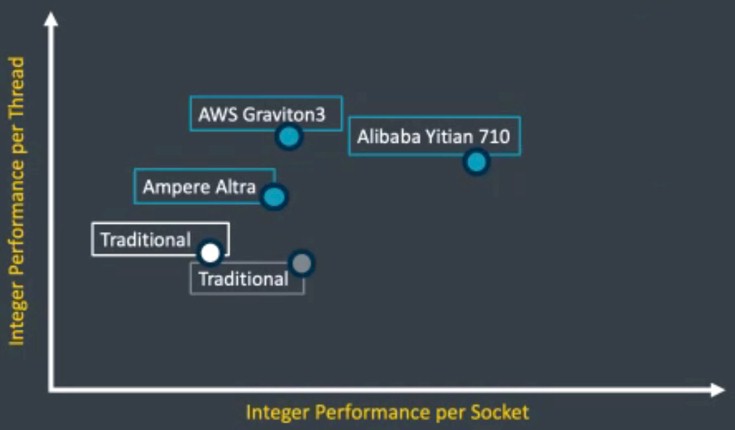

And they are doing just that, and giving Intel and AMD a run for the money. Here is how Dermot O’Driscoll, vice president of product solutions for the infrastructure group at Arm Ltd, sized up the competitive landscape with regard to servers tuned for the cloud right now at the recent Neoverse roadmap reveal:

The white dot is Intel’s “Ice Lake” Xeon SP and the gray dot is AMD’s “Milan” Epyc 7003. As you can see, AWS and Alibaba are not messing around, and neither is Ampere Computing, whose first pass Altra chip with 80 Neoverse N1 cores is shown. The Altra Max with 128 cores pushes Ampere to the right on the chart, probably further to the right than the AMD Epyc 7003, and with its homegrown cores coming later this year and then a rev coming next year, we think Ampere Computing will push both up and to the right.

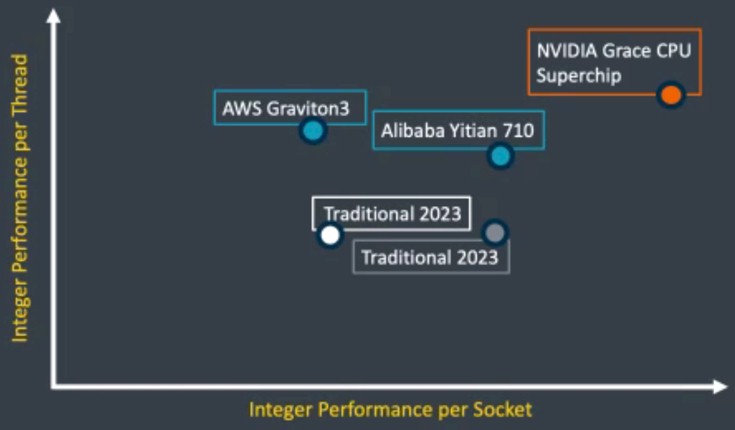

O’Driscoll did not add that to the competitive landscape chart for 2023, shown above, which is why we bring it up, but he did add the next generation “Sapphire Rapids” Xeon SPs from Intel and the next generation Epyc chips from AMD. It is not clear if the AMD chip shown is the 96-core “Genoa” or 128-core “Bergamo” chip, but to be fair, given that this is about cloud customers, it should be Bergamo. The point O’Driscoll was making was where Nvidia’s “Grace” CPU fits in the competitive landscape, and it is up and to the right and will be the chip to beat next year. We think there is a good chance that AWS will have a Graviton4 chip out next year, too, which we fully expect it to reveal at this year’s re:Invent conference in November.

Small wonder, then, that 48 of the top 50 spenders on the AWS cloud are using Graviton, considering that the cost per unit of performance on real workloads is 30 percent to 40 percent lower than X86 instances.
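To put that 30 percent to 40 percent figure in concrete terms, here is a minimal arithmetic sketch. It assumes "cost per unit of performance" scales linearly with the work done, and the function names and the $1,000,000 budget are hypothetical, chosen only for illustration; none of this comes from AWS pricing data.

```python
def performance_per_dollar_gain(cost_reduction: float) -> float:
    """How many times more performance a fixed budget buys when the cost
    per unit of performance drops by `cost_reduction` (a fraction, e.g. 0.30)."""
    if not 0.0 <= cost_reduction < 1.0:
        raise ValueError("cost_reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - cost_reduction)


def budget_for_same_work(x86_budget: float, cost_reduction: float) -> float:
    """Spend needed to do the same amount of work at the lower unit cost."""
    if not 0.0 <= cost_reduction < 1.0:
        raise ValueError("cost_reduction must be in the range [0, 1)")
    return x86_budget * (1.0 - cost_reduction)


if __name__ == "__main__":
    hypothetical_budget = 1_000_000  # illustrative annual X86 instance spend, in dollars
    for reduction in (0.30, 0.40):   # the range quoted in the article
        gain = performance_per_dollar_gain(reduction)
        spend = budget_for_same_work(hypothetical_budget, reduction)
        print(f"{reduction:.0%} lower unit cost: {gain:.2f}x performance per dollar, "
              f"or about ${spend:,.0f} for the same work")
```

Under these assumptions, a 30 percent lower cost per unit of performance means the same budget buys roughly 1.43 times the work, and a 40 percent reduction buys roughly 1.67 times the work, which is why the gap compounds quickly for the largest cloud spenders.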

If Intel and AMD can’t boost performance on both per core and per socket levels, they will have to cut price to compete – and also get the clouds to pass through the price cuts to customers. There is no guarantee that the latter will happen. Windows Server and Linux on X86 are legacy workloads, and every cloud will want to charge a premium for that. There is no incentive whatsoever for the clouds to cut those prices, especially ones that are forging their own Arm server CPUs. And so, X86 on cloud becomes the new mainframe and the new Unix server, with its pricing locked into a curve from a time gone by.

Which brings us to RISC-V. There are several people who can claim the mantle of “father of RISC,” and David Patterson, a prolific researcher and inventor, is one of them. Patterson was the leader of the Berkeley RISC project at the University of California campus of that name, which also gave birth to open source BSD Unix, the Postgres database, and the Spark in-memory data analytics platform, just to name a few. Patterson is also notably vice chair of the board of directors for the RISC-V Foundation, which controls the open source RISC-V instruction set and which moved its headquarters to Switzerland three years ago to put itself outside of the grubby and meddling hands of national governments.

If Arm’s licensable technology is a parallel to the rise of RISC/Unix, then perhaps RISC-V, which has an open source instruction set and logic blocks, is analogous to Linux. Arm may be set up for a good decade long run in the datacenter, at the edge, and in our client devices, but watch out for RISC-V. Ten years from now, we might be writing the same story all over again, with one more historical ring wave added. In fact, it is hard to imagine any other alternative on the horizon.

We’ll speak again in 2032 and see how this all turned out.